Unit 2

Sorting:- Type of Sorting, Bubble Sort, Selection Sort, Insertion Sort, Binary Insertion Sort, Shell Sort, Quick Sort, Merge Sort, Heap, Heap Sort. Sorting in Linear Time:- Counting Sort, Radix Sort, Bucket Sort.

Q1 – A machine took 200 sec to sort 200 names, using bubble sort. in 800 sec, it can approximately sort?

400 names

800 names

700 names

1400 names

Ans – (1)

Explanation – First, we need the time complexity formula for bubble sort.

Bubble sort time complexity is n(n-1)/2.

This means that for sorting 200 names, comparisons are needed = 200(200 – 1)/2

= 19900 comparisons

So, this means in 200 sec, 19900 comparisons are made.

It means in 1 sec, 19900/200 = 100 approximately comparisons are made.

Similarly, for 800 sec, 800*100 = 80000 comparisons are made.

In 80000 comparisons, the number of sorted names is = n*(n – 1)/2

=> n(n-1)/2 = 80000

=> n = 400 approximately.

Q2 – Which of the following is useful in implementing quick sort?

Stack

Set

List

Queue

Ans – (1)

Explanation –

The iterative quick sort uses stack.

Everybody knows recursive stack which does not use stack.

The iterative stack that uses stack steps are

- Push the input element on the stack

- Partition with pivot.

- In stack, pop an element.

- If the range contains more than one element, push partition into a stack.

- Repeat it until stack becomes empty.

Q3 – A machine needs a minimum of 100 sec to sort 1000 names by quick sort. The minimum time needed to sort 100 names will be approximately. (ISRO CS 2015)

50.2 sec

72.7 sec

6.7 sec

11.2 sec

Ans – (3)

Explanation – The minimum time needed by quick sort is the best case. The time complexity of best case is nlogn comparisons.

If 1000 names are sorted, then comparisons will be

=> n*logn = 1000*log(1000) = 1000*9 = 9000 comparison.

If 100 names are sorted, the comparisons will be

=> n*logn = 100*log(100) = 100*6 = 600 comparisons.

If 9000 comparisons time is = 100 sec

Then, 600 comparisons time is = 100*(600/9000) = 6.66 sec

Q4 – Given 2 sorted lists of size ‘m’ and ‘n’ respectively. The number of comparisons needed the worst case by the merge sort algorithm will be (ISRO CS 2018)

m*n

max(m,n)

min(m,n)

m+n-1

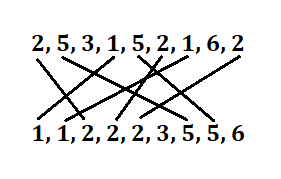

Ans – (4)

Explanation –

The number of comparisons needed in worst case is m + n -1.

Input 1 – 2, 6, 10, 16, 32, 33, 45

Input 2 – 5, 8, 12, 30, 33, 42, 50

Output list –

2 < 5, 2 comes in output list.

6 > 5, 5 comes in output list.

6 < 8, 6 comes in output list.

10 > 8, 8 comes in output list.

…

…

45 < 50, 45 comes in output list.

50 comes in output list. (No comparison)

So, m + n – 1 comparison are needed.

Q5 – The maximum number of comparisons needed to sort 9 items using radix sort is (assume each item is 5 digit octal number) (UGC NET CSE July 2018)

45

72

360

450

Ans – (3)

Explanation –

The maximum number of comparison is the worst case of time complexity of radix sort.

Items = 9

Octal number = 8

Digits = 5

Number of comparisons = items * Octal number * digits

Number of comparisons = 9*8*5 = 360

Q6 – In Randomized quick sort, the expected running time of any input is

O(n)

O(n2)

O(n*logn)

None of these

Ans – (3)

Explanation –

If you are searching for a time complexity of a randomized algorithm, then you only get expected running time, not best, average or worst-case running time.

In randomized quick sort, the pivot is random. Suppose input is – {43, 54, 12, 1, 70, 100, 86} and you pick a random pivot 50. In this case the expected running time is O(nlogn).

Q7 – For __________ insertion sort beats merge sort

n ≥ 43

n ≤ 43

n ≤ 23

can’t say

Ans – (2)

Explanation –

There is a question in T. H. Coreman in exercise of Chapter 1 in which we are comparing insertion sort with merge sort. Insertion sort runs in 8n2 steps, while merge sort runs in 64nlgn steps.

So, insertion sort and merge sort generally runs in 8n2 and 64nlgn steps, we remove the constant and call the time complexity as O(n2) and O(nlgn).

-> 8n2 ≤ 64nlgn

-> n ≤ 8lgn

-> n ≤ 43

Q8 – The time complexity of counting sort is

O(nlogn)

O(n)

O(n2)

O(n2logn)

Ans – (2)

Explanation –

Counting sort time complexity is linear. The time complexity is O(n+k) where k is constant.

There are three sorts which sort in linear time.

- Counting Sort

- Radix Sort

- Bucket Sort

https://compgeek.co.in/counting-sort/ to know more.

Q9 – The time complexity of heap sort is

O(nlogn)

O(n)

O(n2)

O(n2logn)

Ans – (1)

Explanation –

Suppose there are n nodes in a max-heap.

(i) The deletion of root and heapify the tree will take logn time.

(ii) Then, heap contains n-1 nodes, so the deletion of the second node and heapify tree will take log(n-1) time.

(iii) Then, heap contains n-2 nodes, so the deletion of the third node and heapify tree will take log(n-2) time.

(iv) After performing n-2 deletions, the second last item will take log 2 time.

(v) After performing n-1 deletions, the last item will take O(1) time.

-> Sum of all operations = log(n) + log(n-1) + log(n-2) + … + log2 + 1 = log(n!) = n*log(n) – n + log(n)

-> The time complexity is after taking higher order term is O(n*log(n)).

https://compgeek.co.in/heap-sort/ to know more.

Q10 – A sorting algorithm that examines adjacent elements in a list and swaps them if they are not in the desired order is referred to as a.

Insertion Sort

Bubble Sort

Quick Sort

Merge Sort

Q11 – The term used to describe a sorting algorithm that repeatedly traverses a list, swapping the first element with any element that is less than it, and then proceeds with the next first element is known as:

Insertion Sort

Bubble Sort

Selection Sort

Merge Sort

Ans – (3)

Explanation – The selection sort uses minimum element in the list and places it in the first position if the sorting is of the form non-decreasing.

Q12 – A sorting algorithm that applies the concept of a binary tree, where any given number is greater than or equal to all the numbers in its subtree, is commonly known as:

Heap Sort

Selection Sort

Binary Sort

None of this

Ans – (1)

Explanation – The root node value in Max-heap is greater than or equal to every child node value.

If i variable represents the index, then a[i] ≥ a[2i+1] & a[2i+2]

Q13 – The sorting algorithm that does not have a worst-case running time of O(n^2) among the following options is

Quick Sort

Bubble Sort

Insertion Sort

Merge Sort

Ans – (4)

Explanation – Quick sort has worst case running time of O(n2).

Q14 – Which of the following is the stable sorting method?

Binary insertion sort

Insertion sort

Heap sort

Shell sort

Ans – (2)

Explanation – Stable Sort – Suppose before sorting, there are two numbers (ai and aj) same in the input and suppose i and j are their indexes, and i comes before j, then after sorting in the output, if ai comes before aj then you can say, it is a stable sort.

Q15 – A binary tree that is complete and satisfies the property where the value of each node is greater than or equal to the values of its child nodes is commonly referred to as a

Binary Tree

AVL tree

Red-Black Tree

Heap

Ans – (4)

Explanation –

The root node value in Max-heap is greater than or equal to every child node value.

If i variable represents the index, then a[i] ≥ a[2i+1] & a[2i+2]

Q16 – The sorting algorithm that requires the minimum number of comparisons when the items are initially in reverse order and the maximum number of comparisons when the items are in order is the

Insertion Sort

Selection Sort

Binary insertion Sort

Heap Sort

Ans – (3)

Explanation –

Selection Sort still performs a full pass of comparisons, leading to the maximum number of comparisons.

https://compgeek.co.in/binary-insertion-sort/ to know more

Q17 – What best described sorting?

Accessing and processing each record exactly once.

Finding the location of the record with a given key.

Arranging the data in some given order.

Adding a new record to the data structure.

Ans – (3)

Explanation –

Sorting – Arrangement of elements in a non-decreasing or non-increasing order.

https://compgeek.co.in/types-of-sorting/ to know more

Q18 – The insertion sort time complexity is

n2

n + p

n

np, (where p is the number of inversions)

Ans – (2)

Explanation –

If n+p is not in the option then you have to choose n for the best case and n2 for the average and worst case.

Q19 – Binary insertion sort worst-case time complexity is

O(n)

O(nlogn)

O(n2)

O(√n)

Ans – (2)

Q20 – A tree is referred to as meeting the specified condition when each of its nodes holds a value that is greater than any value in its left subtree and less than any value in its right subtree. This type of tree is commonly known as a

Max Heap

Binary Search Tree

Min Heap

Fibonacci Tree

Ans – (2)

Explanation –

A tree is referred to as meeting the specified condition when each of its nodes holds a value that is greater than any value in its left subtree and less than any value in its right subtree is commonly known as binary search tree.