Huffman coding is a widely used algorithm in the field of data compression. Huffman coding is primarily used for lossless data compression.

In lossless compression, the original data can be perfectly reconstructed from the compressed version, ensuring that no information is lost during the compression and decompression processes.

It is an example of a greedy algorithm that efficiently encodes data by assigning shorter codes to more frequent symbols.

.

Introduction

Huffman coding, invented by David A. Huffman in 1952, plays a crucial role in reducing the size of data for efficient storage and transmission.

It’s a variable-length prefix coding algorithm, meaning it assigns shorter codes to more frequent symbols. This ensures that commonly used symbols are encoded with fewer bits, reducing the overall size of the data.

.

Algorithm Of Huffman Code

- Make a leaf node for each character and also write down the frequency of each character in leaf node.

- Arrange the nodes in non-descending order of frequency value.

- Choose the first two nodes have the lowest frequency,

- Make a new internal node by joining the two nodes have lowest frequency.

- The frequency of this new internal node is the sum of the frequencies of the two previous nodes.

- Make the first node the left child of the newly generated node, and the other node the right child.

- Repeat Steps 2 to 6 until all of the nodes form a single tree.

The tree that was ultimately obtained is the Huffman Tree for the input.

.

How Huffman Code Works

Let’s consider a simple example with the string

“ABRA-KA-DABRA.”

First, we calculate the frequency of each character.

.

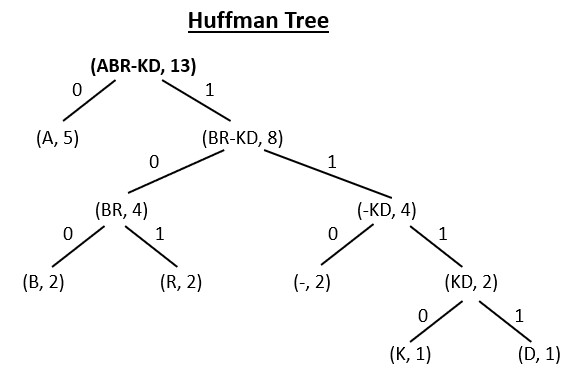

The shortest frequency is (K, 1) and (D, 1).

Then, we make two leaf nodes K and D and write its Frequency.

.

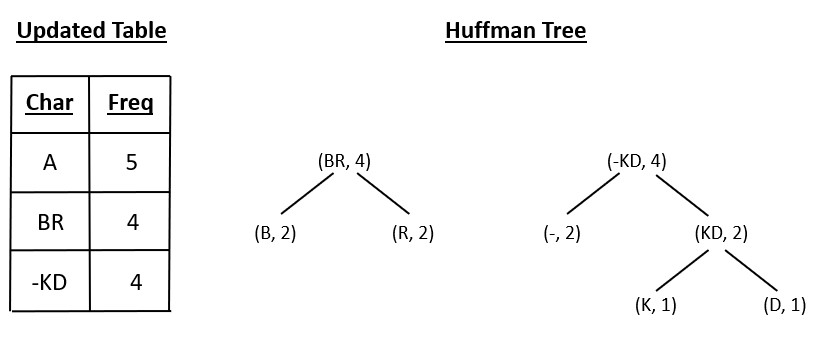

The Shortest frequency is (B, 2), (R, 2), (-, 2) and (KD, 2)

Then, we make two leaf nodes B and R and write its frequency.

Also, we make other leaf node (-) with internal node KD and write its frequency.

.

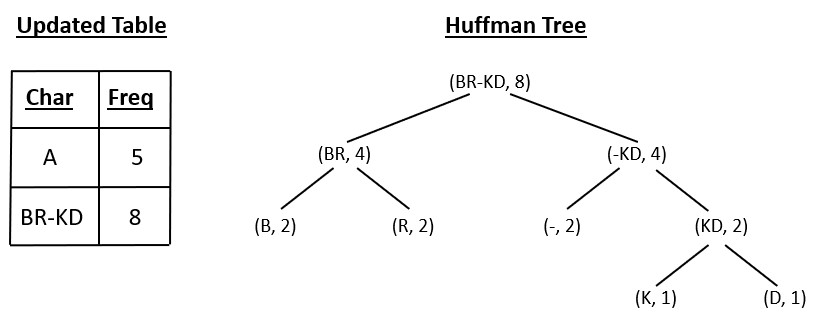

The Shortest frequency is (BR, 4) and (-KD, 4).

Then, we join two internal nodes BR and -KD and write its frequency.

.

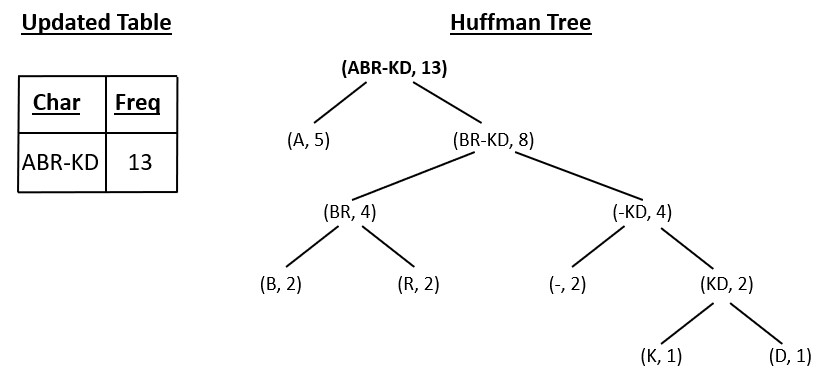

The Shortest frequency is (A, 5) and (BR-KD, 8).

Then, we join two internal nodes A & BR-KD and write its frequency.

.

We have constructed the Huffman tree and there is only 1 node left (ABR-KD) and its frequency is 13.

.

Now, in Huffman tree, Start from the root node.

Label the edge to the left child as 0 and the edge to the right child as 1. Repeat the process at both the left child and the right child.

Count the edge number of every leaf child.

A = 0

B = 100

R = 101

(-) = 110

K = 1110

D = 1111

So, the shortest form of ABRA-KA-DABRA is 0100101011011100110111101001010.

Without Encoding = 8*13 = 104 bits

With Encoding = 31 bits

.

Source Code for Huffman Code

// COMPUTER GEEK – compgeek.co.in

// Write a program for Huffman Tree

#include <stdio.h>

#include <stdlib.h>

// Define the node structure for Huffman tree

typedef struct Node

{

char data; // Character

int freq; // Frequency

struct Node *left; // Left child

struct Node *right; // Right child

} Node;

// Function to create a new node

Node* createNode(char data, int freq)

{

Node* newNode = (Node*)malloc(sizeof(Node));

newNode->data = data;

newNode->freq = freq;

newNode->left = NULL;

newNode->right = NULL;

return newNode;

}

int main()

{

int numChars;

// User will enter the number of characters

printf(“Enter the number of characters: “);

scanf(“%d”, &numChars);

// Create an array of nodes to store character frequencies

Node* nodes[numChars];

// User will enter character frequencies

for (int i = 0; i < numChars; i++)

{

char character;

int frequency;

printf(“Enter character %d: “, i + 1);

scanf(” %c”, &character); // Space before %c to consume newline

printf(“Enter frequency for character %c: “, character);

scanf(“%d”, &frequency);

// Create a new node for the character and frequency

nodes[i] = createNode(character, frequency);

}

// Construct the Huffman tree

while (numChars > 1)

{

// Find the two nodes with the lowest frequencies

int min1 = 0, min2 = 1;

if (nodes[min1]->freq > nodes[min2]->freq) {

int temp = min1;

min1 = min2;

min2 = temp;

}

for (int i = 2; i < numChars; i++)

{

if (nodes[i]->freq < nodes[min1]->freq)

{

min2 = min1;

min1 = i;

}

else if (nodes[i]->freq < nodes[min2]->freq)

{

min2 = i;

}

}

// Create a new internal node and update the array

Node* internalNode = createNode(‘$’, nodes[min1]->freq + nodes[min2]->freq);

internalNode->left = nodes[min1];

internalNode->right = nodes[min2];

// Remove the used nodes and add the new internal node

nodes[min1] = internalNode;

nodes[min2] = nodes[numChars – 1];

numChars–;

}

// Print the Huffman codes

printf(“Huffman Codes:\n”);

// A function to traverse the Huffman tree and print codes

void printCodes(Node* root, int code[], int top)

{

if (root->left)

{

code[top] = 0;

printCodes(root->left, code, top + 1);

}

if (root->right)

{

code[top] = 1;

printCodes(root->right, code, top + 1);

}

if (!root->left && !root->right)

{

printf(“%c: “, root->data);

for (int i = 0; i < top; i++)

{

printf(“%d”, code[i]);

}

printf(“\n”);

}

}

int code[numChars], top = 0;

printCodes(nodes[0], code, top);

return 0;

}

// COMPUTER GEEK – compgeek.co.in

// Write a program for Huffman Tree

#include <iostream>

#include <map>

#include <queue>

// Define the node structure for Huffman tree

struct Node

{

char data; // Character

int freq; // Frequency

Node* left; // Left child

Node* right; // Right child

Node(char data, int freq) : data(data), freq(freq), left(nullptr), right(nullptr) {}

};

// Comparison function for the priority queue

struct CompareNode

{

bool operator()(Node* const& a, Node* const& b)

{

return a->freq > b->freq;

}

};

// Function to construct the Huffman tree

Node* buildHuffmanTree(std::map<char, int>& frequencies)

{

std::priority_queue<Node*, std::vector<Node*>, CompareNode> pq;

for (auto const& pair : frequencies)

{

pq.push(new Node(pair.first, pair.second));

}

while (pq.size() > 1)

{

Node* left = pq.top();

pq.pop();

Node* right = pq.top();

pq.pop();

Node* internalNode = new Node(‘$’, left->freq + right->freq);

internalNode->left = left;

internalNode->right = right;

pq.push(internalNode);

}

return pq.top();

}

// Function to print Huffman codes

void printHuffmanCodes(Node* root, std::string code)

{

if (!root)

{

return;

}

if (root->data != ‘$’)

{

std::cout << root->data << “: ” << code << std::endl;

}

printHuffmanCodes(root->left, code + “0”);

printHuffmanCodes(root->right, code + “1”);

}

int main()

{

int numChars;

// User will enter the number of characters

std::cout << “Enter the number of characters: “;

std::cin >> numChars;

std::map<char, int> frequencies;

// User will enter character frequencies

for (int i = 0; i < numChars; i++)

{

char character;

int frequency;

std::cout << “Enter character ” << i + 1 << “: “;

std::cin >> character;

std::cout << “Enter frequency for character ” << character << “: “;

std::cin >> frequency;

frequencies[character] = frequency;

}

// Build the Huffman tree

Node* root = buildHuffmanTree(frequencies);

// Print the Huffman codes

std::cout << “Huffman Codes:” << std::endl;

printHuffmanCodes(root, “”);

return 0;

}

// COMPUTER GEEK – compgeek.co.in

// Write a program for Huffman Tree

import java.util.PriorityQueue;

import java.util.Scanner;

class Node

{

char data;

int freq;

Node left;

Node right;

public Node(char data, int freq)

{

this.data = data;

this.freq = freq;

left = null;

right = null;

}

}

class Huffman

{

public static Node createNode(char data, int freq) {

return new Node(data, freq);

}

public static void printHuffmanCodes(Node root)

{

int[] code = new int[256];

int top = 0;

printCodes(root, code, top);

}

private static void printCodes(Node node, int[] code, int top)

{

if (node.left != null) {

code[top] = 0;

printCodes(node.left, code, top + 1);

}

if (node.right != null)

{

code[top] = 1;

printCodes(node.right, code, top + 1);

}

if (node.left == null && node.right == null)

{

System.out.print(node.data + “: “);

for (int i = 0; i < top; i++)

{

System.out.print(code[i]);

}

System.out.println();

}

}

public static void main(String[] args)

{

Scanner scanner = new Scanner(System.in);

int numChars;

System.out.print(“Enter the number of characters: “);

numChars = scanner.nextInt();

Node[] nodes = new Node[numChars];

for (int i = 0; i < numChars; i++)

{

char character;

int frequency;

System.out.print(“Enter character ” + (i + 1) + “: “);

character = scanner.next().charAt(0);

System.out.print(“Enter frequency for character ” + character + “: “);

frequency = scanner.nextInt();

nodes[i] = createNode(character, frequency);

}

while (numChars > 1)

{

int min1 = 0, min2 = 1;

if (nodes[min1].freq > nodes[min2].freq)

{

int temp = min1;

min1 = min2;

min2 = temp;

}

for (int i = 2; i < numChars; i++)

{

if (nodes[i].freq < nodes[min1].freq)

{

min2 = min1;

min1 = i;

}

else if (nodes[i].freq < nodes[min2].freq)

{

min2 = i;

}

}

Node internalNode = createNode(‘$’, nodes[min1].freq + nodes[min2].freq);

internalNode.left = nodes[min1];

internalNode.right = nodes[min2];

nodes[min1] = internalNode;

nodes[min2] = nodes[numChars – 1];

numChars–;

}

printHuffmanCodes(nodes[0]);

}

}

# COMPUTER GEEK – compgeek.co.in

# Write a program for Huffman Tree

class Node:

def __init__(self, data, freq):

self.data = data

self.freq = freq

self.left = None

self.right = None

def create_node(data, freq):

new_node = Node(data, freq)

return new_node

def huffman_codes(root):

code = [0] * 256

top = 0

def print_codes(node, code, top):

if node.left:

code[top] = 0

print_codes(node.left, code, top + 1)

if node.right:

code[top] = 1

print_codes(node.right, code, top + 1)

if not node.left and not node.right:

print(f”{node.data}: {”.join(map(str, code[:top]))}”)

print(“Huffman Codes:”)

print_codes(root, code, top)

def main():

num_chars = int(input(“Enter the number of characters: “))

nodes = []

for i in range(num_chars):

character = input(f”Enter character {i + 1}: “)

frequency = int(input(f”Enter frequency for character {character}: “))

nodes.append(create_node(character, frequency))

while num_chars > 1:

min1, min2 = 0, 1

if nodes[min1].freq > nodes[min2].freq:

min1, min2 = min2, min1

for i in range(2, num_chars):

if nodes[i].freq < nodes[min1].freq:

min2 = min1

min1 = i

elif nodes[i].freq < nodes[min2].freq:

min2 = i

internal_node = create_node(‘$’, nodes[min1].freq + nodes[min2].freq)

internal_node.left = nodes[min1]

internal_node.right = nodes[min2]

nodes[min1] = internal_node

nodes[min2] = nodes[num_chars – 1]

num_chars -= 1

huffman_codes(nodes[0])

if __name__ == “__main__”:

main()

Time Complexity of Huffman Code

The time complexity of the Huffman coding algorithm depends on several factors.

- Frequency Counting – In the first step, the algorithm counts the frequency of each character in the input. The time complexity for frequency counting is O(n), where ‘n’ is the number of characters in the input.

- Building the Huffman Tree – When using a priority queue (min-heap), the time complexity to build the Huffman Tree is O(nlogn), where ‘n’ is the number of characters.

- Generating Huffman Codes – Once the Huffman tree is constructed, the algorithm traverses the tree to generate Huffman codes for each character. This step has a time complexity of O(n) since each character is processed once.

Time Complexity of Huffman Code is

è O(n) + O(nlogn) + O(n) = O(nlogn).

.

Space Complexity of Huffman Code

Since time complexity depends on many factors, the same applies to space complexity.

- Frequency Counting – The space complexity for this step is O(n), where ‘n’ is the number of unique characters in the input. In this step, you need to store the frequency counts of each character. Therefore, the space required is proportional to the number of unique characters.

- Building the Huffman Tree – If a priority queue is used, the space complexity for the tree is O(n), where ‘n’ is the number of unique characters. Additionally, you need to store internal nodes and their relationships. The total space used for the tree structure is also O(n).

- Generating Huffman Codes – As you generate Huffman codes for each character, you need space to store these codes. The space required for this step is O(n), where ‘n’ is the number of unique characters.

Space Complexity of Huffman Code is = O(n).

Advantages of Huffman Code

- Huffman coding is highly efficient in compressing data.

- It is used in various applications, including image and video compression, and in popular file compression formats like ZIP.

- can adapt to the input data frequencies.

- It minimizes the average length of the code, which ensures that no other encoding method can achieve better compression for the same input data.

- Fast Encoding and Decoding

- It eliminates redundancy by assigning unique codes to each symbol.

Disadvantages of Huffman Code

- Inefficient for datasets that contains only one alphabet, like “The quick brown fox jumps over the lazy dog“

- It does not include error detection or correction mechanisms.

Applications of Huffman Code

- Used in social media contents like WhatsApp and Facebook. But it is not meant for encryption; it’s for data compression.

- Used in optical discs, such as DVDs and Blu-ray discs.

- Data Transmission in IoT.

- Data Backup and Archiving.

- Dictionaries and Encyclopedias.

Test Yourself

Q1- Can a Huffman tree be unbalanced? If so, under what conditions might it happen?

A Huffman tree can become unbalanced in cases where the frequencies of characters are not distributed evenly. If certain characters have significantly higher frequencies than others, the tree may become skewed, which can result in inefficient encoding and longer codes for some characters.

Q2- Explain the concept of a “prefix-free” code in the context of Huffman coding. Why is it important?

In Huffman coding, a “prefix-free” code means that no code for one character is a prefix of the code for another character.

- This is important because it ensures that the encoded message can be uniquely decoded without any ambiguity.

- If a code were not prefix-free, it could lead to decoding errors.

Q3- Explain the time complexity of the Huffman coding algorithm.

The time complexity is O(n log n), where ‘n’ is the number of unique characters, due to the building of the Huffman tree using a priority queue.

Q4- Explain the role of Huffman coding in data transmission for the Internet of Things (IoT).

Huffman coding reduces the size of data packets, enabling faster and more efficient data transmission in IoT applications with limited bandwidth.

Q5- For a given set of characters and their frequencies, how would you calculate the average code length in bits for the Huffman code?

You can calculate the average code length by summing the product of each character’s frequency and its code length and then dividing by the total number of characters.

Q6- What is the primary purpose of Huffman coding?

Lossy data compression

Error correction

Lossless data compression

Data encryption

Ans – (3)

Explanation – Huffman coding is primarily used for lossless data compression.

Q7- Who invented the Huffman coding algorithm?

David A. Huffman

Alan Turing

Claude Shannon

Robert Fano

Ans – (1)

Explanation – David A. Huffman invented the Huffman coding algorithm in 1952.

Q8- What does the Huffman coding algorithm assign to more frequent symbols?

Longer codes

Lower frequencies

Shorter codes

Random codes

Ans – (3)

Explanation – Huffman coding assigns shorter codes to more frequent symbols.

Q9- What is the time complexity of the Huffman coding algorithm for building the Huffman tree?

O(n)

O(log n)

O(1)

O(n log n)

Ans – (4)

Explanation – The time complexity for building the Huffman tree is O(n log n).

Q10- In Huffman coding, what is the compression ratio?

Original size / Compressed size

Compressed size / Original size

Original size – Compressed size

Compressed size – Original size

Ans – (1)

Explanation – The compression ratio is calculated as the original size divided by the compressed size.

Q11- In which application does Huffman coding play a crucial role in reducing data size?

Real-time video streaming

Optical character recognition (OCR)

Image and video compression

Data encryption

Ans – (3 & 4)

Explanation – Huffman coding is commonly used for compressing images and videos.

Q12- What is the main advantage of Huffman coding in data compression?

Lossy compression

Random code assignment

Fast encoding and decoding

No compression

Ans – (3)

Explanation – Huffman coding allows for fast encoding and decoding of data, making it efficient for various applications.